5 Must-Have Features for LLM Evaluation Frameworks

Large Language Models (LLMs) are revolutionizing the way we interact with technology. From chatbots that handle customer interactions to tools that automate repetitive tasks and generate code, LLMs are increasingly being used in industries as diverse as healthcare, finance, and retail. A particularly notable aspect of LLMs is how quickly teams can build functional prototypes with them, often within minutes or hours. A key factor in this accelerated development is the intuitive, prompt-based interaction between humans and LLMs. As a result, there has been an explosion of innovative LLM-based prototypes, demonstrating the versatility of these models and their potential to transform various industries.

From Prototype to Production: The Challenge of Deploying LLMs

However, moving from prototype to reliable production remains a significant challenge with LLMs. Unlike traditional software applications, LLMs are non-deterministic, meaning that they can produce different outputs for the same input when run multiple times. In addition, LLMs are prone to "hallucination," producing text that appears plausible but is factually incorrect. The complexity of LLMs can make it difficult to identify and quantify their problems. This unpredictability, coupled with the black-box nature of LLMs, can lead to hesitation among organizations considering their deployment.

LLMs must be evaluated

A critical aspect of addressing this issue is ensuring consistent performance and behavior throughout the lifecycle of the model. Traditional software applications typically undergo rigorous testing to identify bugs, optimize functionality, and improve overall stability. While LLMs cannot be tested in the same way as regular code due to their non-deterministic nature, automated evaluation of LLM systems is critical to confidently integrating LLMs into production environments. That's where an LLM evaluation framework comes in.

What is an LLM Evaluation Framework?

An LLM evaluation framework is a systematic approach to evaluating the performance and capabilities of LLMs by testing their outputs on a range of different criteria. By establishing transparent metrics for measuring performance and providing actionable insights into areas for optimization, such a framework can help assess model performance, identify and mitigate potential problems, and ultimately build confidence among organizations in deploying LLMs effectively.

Key Features of an Effective LLM Evaluation Framework

Numerous frameworks have emerged to address LLM evaluation. When choosing one or building one from scratch, consider the following key features of an effective evaluation framework:

1. Providing a Human-Centric User Experience (UX)

An effective LLM evaluation framework places the human user at the center, using the principles of human-centered design. Rather than prioritizing the LLM itself, the framework is tailored to the needs and capabilities of the domain experts responsible for the evaluation process. This ensures that the framework facilitates seamless collaboration between AI and human experts, augmenting human capabilities with AI assistance while preserving human judgment and decision making. A human-centered evaluation framework does not rely solely on quantitative metrics, but also incorporates qualitative assessments and expert judgment. Examples of human-centered UX principles for LLM evaluation frameworks include:

- Collaborative decision-making: Allow humans to apply their expertise and intuition, complemented by the precision and analytical power of AI tools.

- Preserve expert judgment: Ensure that the framework respects and enhances the critical thinking and judgment of human evaluators.

- Accessible design: Provide clear instructions, concise visualizations, and easy-to-interpret results for domain experts with varying levels of technical expertise.

2. Enabling Model Grounding with Verified Facts

An effective LLM evaluation framework enables the process of "grounding" an LLM. LLM grounding is the process of providing an LLM with verified, domain-specific facts so that it can respond to queries more accurately and with more relevant context. By integrating external datasets and knowledge bases, a grounded LLM can draw on industry- and organization-specific nuances, terminology, and concepts beyond its initial general training. This results in more reliable, accurate, and tailored responses based on real-world information relevant to a particular domain or dataset.

The figure below visualizes the LLM grounding process for enriching a prompt (user query) with "grounded" facts before triggering a model response from the LLM (in this case, an OpenAI model).
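The enrichment step can also be sketched in code. The following is a minimal illustration, not a description of any particular product: the `Fact` type, the `retrieve_facts` and `build_grounded_prompt` helpers, and the keyword-overlap ranking are all assumptions made for this example. A real system would typically use a proper retrieval component and then pass the resulting prompt to the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """A verified fact from a knowledge base (hypothetical structure)."""
    source: str  # where the fact comes from
    text: str    # the fact itself


def retrieve_facts(query: str, knowledge_base: list[Fact], top_k: int = 2) -> list[Fact]:
    """Rank facts by naive keyword overlap with the query (stand-in for real retrieval)."""
    query_terms = set(query.lower().split())
    scored = [
        (len(query_terms & set(fact.text.lower().split())), fact)
        for fact in knowledge_base
    ]
    # Keep only facts that share at least one term, best matches first.
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for _, fact in relevant[:top_k]]


def build_grounded_prompt(query: str, facts: list[Fact]) -> str:
    """Prepend the retrieved facts to the user query before it is sent to the model."""
    fact_lines = "\n".join(f"- {f.text} (source: {f.source})" for f in facts)
    return (
        "Answer using only the verified facts below.\n"
        f"Facts:\n{fact_lines}\n\n"
        f"Question: {query}"
    )
```

The string returned by `build_grounded_prompt` is what would be sent to the LLM in place of the raw user query.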

3. Enabling LLM Hyperparameter Tuning

An effective LLM evaluation framework assists teams in tuning LLM hyperparameters, a process that can significantly affect the behavior and performance of an LLM. LLM hyperparameters are configuration settings that determine how an LLM produces outputs. Those listed here are set at generation time and act as the control knobs of an LLM, governing aspects such as randomness, diversity, and determinism. Examples of LLM hyperparameters are:

- Temperature: Temperature controls the randomness of an LLM's output, with a high temperature producing more unpredictable and creative results, and a low temperature producing more deterministic and conservative output.

- Top P: Top p controls the diversity of the generated text by restricting sampling to the smallest set of most likely tokens whose cumulative probability is greater than or equal to a specified threshold p.

- Maximum Length: The maximum length of an LLM system's response, specifically the maximum number of output tokens.
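To make temperature and top p more concrete, here is a small sketch of how they might shape the choice of the next token. It works on a toy dictionary of token scores rather than a real model, and the function names (`apply_temperature`, `top_p_filter`, `sample_token`) are illustrative assumptions, not the API of any specific library.

```python
import math
import random


def apply_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of most likely tokens with cumulative probability >= p."""
    kept: dict[str, float] = {}
    cumulative = 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())  # renormalize so the kept probabilities sum to 1
    return {tok: prob / total for tok, prob in kept.items()}


def sample_token(logits, temperature=1.0, top_p=1.0, rng=None):
    """Sample one token after applying temperature scaling and top-p filtering."""
    rng = rng or random.Random()
    probs = top_p_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
```

With a low temperature or a small p, the most likely token dominates and outputs become more repeatable; raising either value lets less likely tokens through. Maximum length is not modeled here, since it simply caps how many tokens are generated.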

4. Creating and Testing Model Evaluations (evals)

An effective LLM evaluation framework provides the ability to easily create and test model evaluations (evals), ideally in an automated fashion. Evals are like unit tests for LLMs. They evaluate the performance of an LLM by analyzing the output produced along various dimensions, such as format, grammar, tone, or fluency. Effective evals cover diverse test scenarios, are computationally efficient to run, and test critical aspects of the LLM to ensure a reliable user experience. While evals are best defined by subject-matter experts (SMEs), the grading of evals can be done by either humans or AI:

- Human-graded evals: Human experts evaluate the output of the LLM. While thorough, human evaluation can be time-consuming and expensive.

- Model-graded evals: A specially trained model evaluates the output of the LLM, essentially using AI to grade AI. This provides efficiency, consistency, and easy reproducibility of scores. Using model-graded evals allows SMEs to focus more on complex edge cases.
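The unit-test analogy can be illustrated with a short sketch. Everything named here (`EvalResult`, `eval_valid_json`, `eval_max_words`, `eval_model_graded`, `run_evals`, `pass_rate`) is hypothetical and chosen for this example. The model-graded eval accepts any scoring function, so a call to a grading LLM could be plugged in where the example assumes a simple callable returning a score between 0 and 1.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalResult:
    name: str
    passed: bool
    detail: str = ""


def eval_valid_json(output: str) -> EvalResult:
    """Format check: the output must parse as a JSON object."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError as err:
        return EvalResult("valid_json", False, str(err))
    ok = isinstance(parsed, dict)
    return EvalResult("valid_json", ok, "" if ok else "top-level value is not an object")


def eval_max_words(limit: int) -> Callable[[str], EvalResult]:
    """Length check: build an eval that fails outputs longer than `limit` words."""
    def check(output: str) -> EvalResult:
        count = len(output.split())
        return EvalResult(f"max_{limit}_words", count <= limit, f"{count} words")
    return check


def eval_model_graded(name: str, grader: Callable[[str], float],
                      threshold: float = 0.7) -> Callable[[str], EvalResult]:
    """Wrap a scoring callable (for example, a grading model) as an eval."""
    def check(output: str) -> EvalResult:
        score = grader(output)
        return EvalResult(name, score >= threshold, f"score={score:.2f}")
    return check


def run_evals(output: str, evals: list[Callable[[str], EvalResult]]) -> list[EvalResult]:
    """Run every eval against one LLM output."""
    return [evaluate(output) for evaluate in evals]


def pass_rate(results: list[EvalResult]) -> float:
    """Fraction of evals that passed (0.0 when there are no results)."""
    return sum(r.passed for r in results) / len(results) if results else 0.0
```

Deterministic checks such as format and length are cheap to run on every change, while model-graded checks can cover dimensions such as tone that are hard to express as rules.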

5. Managing Costs and Response Times

An ideal LLM evaluation framework facilitates the management of cost and response time (also called latency) during the iterative development and testing of LLMs. Specific features that an LLM evaluation framework can provide include:

- Cost monitoring and reporting: Real-time monitoring of the costs associated with the evaluation process, such as the automatic execution of model-graded evals. Monitoring both cost and evaluation performance is essential to ensure that budget constraints are met without compromising the quality of the LLM.

- Flexible model selection: Allowing the user to switch between different LLMs based on the complexity of the task and the desired speed of response. For example, less demanding tasks can be routed through smaller, more efficient models (such as GPT-3.5 Turbo) to reduce cost and latency.

- Performance metrics and alerts: Tracking key performance indicators such as latency, token count, and cost per request can provide insights into the efficiency of LLM operations. Alerts can be configured to notify teams of potential problems or inefficiencies, enabling proactive management.

By providing these capabilities, an LLM evaluation framework not only helps manage cost and latency, but also improves the overall efficiency and effectiveness of LLM deployment and maintenance.
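As a rough sketch of what such monitoring could look like, the example below computes cost per request from token counts and raises simple alerts. The model names and per-token prices are placeholders invented for this example, not real vendor pricing, and `RequestLog`, `request_cost`, and `summarize` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestLog:
    model: str
    input_tokens: int
    output_tokens: int
    latency_s: float


# Hypothetical prices in USD per 1,000 tokens (placeholders, not real pricing).
PRICES_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


def request_cost(log: RequestLog) -> float:
    """Cost of a single request based on its token counts."""
    price = PRICES_PER_1K[log.model]
    return (log.input_tokens * price["input"]
            + log.output_tokens * price["output"]) / 1000


def summarize(logs: list[RequestLog], budget_usd: float, max_latency_s: float) -> dict:
    """Aggregate cost and latency, and collect alerts when thresholds are exceeded."""
    total_cost = sum(request_cost(log) for log in logs)
    slow = [log for log in logs if log.latency_s > max_latency_s]
    alerts = []
    if total_cost > budget_usd:
        alerts.append(f"cost {total_cost:.4f} USD exceeds budget {budget_usd:.4f} USD")
    if slow:
        alerts.append(f"{len(slow)} request(s) slower than {max_latency_s}s")
    return {
        "total_cost_usd": total_cost,
        "avg_latency_s": sum(log.latency_s for log in logs) / len(logs) if logs else 0.0,
        "alerts": alerts,
    }
```

Comparing the summary for the same test set across two models is one simple way to decide whether a less demanding task can be routed to the cheaper one.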

Introducing Scorecard: An End-to-End LLM Evaluation Framework

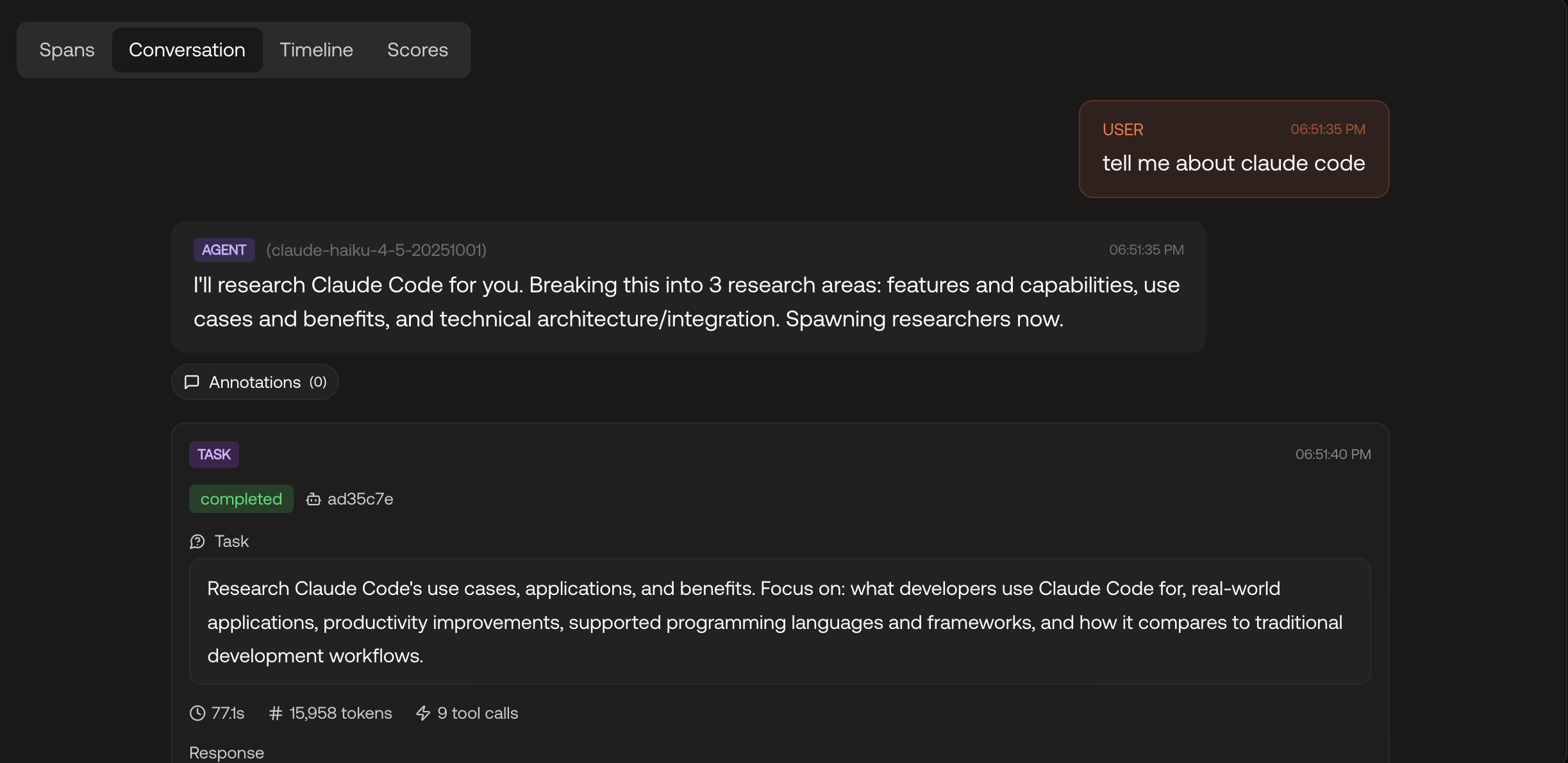

Scorecard is an end-to-end evaluation toolkit that facilitates automated LLM evaluation. It streamlines the path from prototype to production in minutes by providing features such as:

- Prompt Management: Consolidation, versioning, and tracking of prompts.

- Tracing & Logging: Out-of-the-box tracing and logging of important LLM activities.

- Privacy by Design: Built-in privacy and SOC 2 compliance.

- Automated Scoring: Automated, model-graded testing based on SME expertise.

- Best-in-Class Metrics: Customizable metrics based on the expertise of LLM experts.

- Testset Management: All-in-one overview and management of created Testsets.

- A/B Comparison: Side-by-side comparison of evaluation runs with different specifications.

- AI Guardrails: Safeguarded LLM conversations by controlling LLM responses.

- Run Inspection: Detailed break-down of results for individual evaluation runs.

- AI Proxy: Flexible LLM management by easily changing underlying models.

With these features, Scorecard enables faster deployments, improved user experience, and ultimately increased confidence in LLM applications through comprehensive testing.

Conclusion: Using an LLM Evaluation Framework Increases Competitive Advantage

Overall, the ideal LLM evaluation framework provides a human-centric UX, enables model grounding and LLM hyperparameter tuning, offers eval creation and testing, and facilitates cost and latency management. Ultimately, an LLM evaluation framework like Scorecard enables you to achieve high-performing LLM applications, resulting in superior user experiences, happier customers, and a strong competitive advantage.

Get started with Scorecard today and move from prototype to production in no time. Contact us to find out how you can implement Scorecard with your existing LLM application.
